When you find information on the web that you want to collect, you may wonder how to go about it. Large-scale web scraping is an efficient way to gather that information and can help you reach your work goals quickly and accurately, but it also comes with a number of challenges.

Python is one of the simplest ways to get started. It suits scenarios ranging from simple to complex, and its many libraries make it straightforward to build a web scraping tool.

This article explores the legality of web scraping, describes what kinds of data you can scrape, and walks through the steps for scraping tables with Python.

Is web scraping legal?

Web scraping tools themselves are not illegal. They are automated programs used to extract information from public web pages. The legality of web scraping depends on how you use it. If it infringes on the legal rights of others, it may constitute a violation of the law.

Copyright infringement: If the scraped content (such as text, pictures, videos) is protected by copyright, unauthorized use may constitute infringement.

Privacy infringement: Scraping data containing user personal information (such as name, contact information) may violate privacy protection laws.

Violation of website agreement: Many websites explicitly prohibit automated crawling in their user agreements. Violation of these terms may result in legal liability.

Unfair competition: Obtaining competitive advantage by crawling competitors' business data may be considered unfair competition.

Scenarios where web scraping can be applied

Price monitoring and dynamic pricing

E-commerce platforms scrape prices of competing products and adjust their pricing strategies in real time (such as Amazon seller tools).

The tourism industry monitors price fluctuations of hotels and air tickets.

Market trend prediction

Scrape hot topics and search keywords on social media to predict consumption trends.

Analyze job requirements on recruitment websites and predict talent gaps in the industry (such as LinkedIn industry reports).

Competitive product analysis

Scrape product descriptions and user reviews on competitor websites to optimize product design.

Monitor competitors' advertising strategies (such as Google Ads keyword crawling).

Data collection and research

Scrape public paper databases (such as arXiv, PubMed) for bibliometric analysis.

Collect public datasets, such as climate and economic data, for modeling research.

Language processing and AI training

Build corpora to train natural language processing models (for example, web-crawled text was part of the training data for the early models behind ChatGPT).

Scrape multilingual web content and train translation systems.

Search engine optimization (SEO)

Scrape search engine results pages (SERPs) to analyze ranking factors and optimize website SEO.

Monitor technical indicators such as dead links and loading speed (as tools such as Ahrefs and SEMrush do).

Construction of aggregation platforms

News aggregation apps (such as Flipboard) crawl content from multiple media outlets, then classify and combine it into feeds for their users.

Price comparison websites (such as Google Shopping) aggregate product information from different e-commerce platforms.

Automation tool development

Develop recruitment information aggregation tools.

In the financial field, scrape financial report data of listed companies and generate visual reports (such as Wind Information).

Tools for scraping web tables

Tools for scraping web tables fall into two categories: libraries and tools based on programming languages, and visual no-code tools. The following are some commonly used options for different needs and skill levels.

1. Programming language tools

Python is one of the most commonly used languages for web scraping and provides several powerful libraries for scraping and parsing web tables.

BeautifulSoup and requests are often used together: requests sends HTTP requests to obtain the web page content, and BeautifulSoup parses the HTML and extracts the table data. This combination is suitable for static web pages, where the table data is embedded directly in the HTML.

Pandas provides a read_html() method that can read table data directly from a web page. Suitable for simple table scraping without manually parsing HTML.
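As a minimal sketch of the read_html() approach, the snippet below parses an inline HTML string instead of a live page. The sample table is made up for illustration, and the call assumes an HTML parser that pandas can use (such as lxml) is installed.

```python
from io import StringIO

import pandas as pd

# Inline HTML stands in for a downloaded page (made-up sample data).
html = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
  <tr><td>Gadget</td><td>19.50</td></tr>
</table>
"""

# read_html returns a list with one DataFrame per <table> it finds.
tables = pd.read_html(StringIO(html))
df = tables[0]
print(df)
```

In practice you would pass the HTML text of the page you downloaded, wrapped in StringIO in the same way.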

Selenium simulates browser behavior and is suitable for dynamically loaded web pages (such as tables rendered by JavaScript) and for pages that require login or interaction.

JavaScript can also be used for web scraping, especially when the scraper needs to run in a browser environment. Puppeteer is a Node.js library that controls Chrome or Chromium browsers. It is suitable for dynamically loaded tables and for web pages where user interaction has to be simulated.

2. Visual no-code tools

Octoparse is a powerful web crawler that supports both dynamic and static web pages and provides a variety of data export formats (such as CSV, Excel, JSON). It is suitable for users who need to crawl large amounts of data regularly. It offers a visual interface, supports custom crawling rules and proxy servers, and can handle complex web page structures.

ParseHub supports dynamic web pages and table crawling, and lets you customize crawling rules. It is suitable for beginners and users who need to crawl data quickly. It can crawl multiple pages and export to multiple formats, and it is available in free and paid versions.

WebScraper.io is a crawler delivered as a browser extension, so you can crawl web tables directly in the browser. It is simple to operate, which makes it a good fit for non-technical users and simple table crawling, and it supports exporting to formats such as CSV and JSON.

Import.io provides web data crawling and API generation services and supports multiple data types such as tables and text. It is suitable for users who need to use crawled data for business analysis. It provides custom crawling rules as well as data cleaning and update functions.

How to use Python to scrape website tables

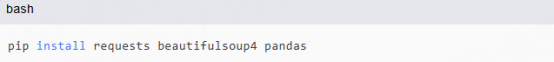

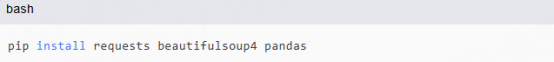

1. Install necessary libraries

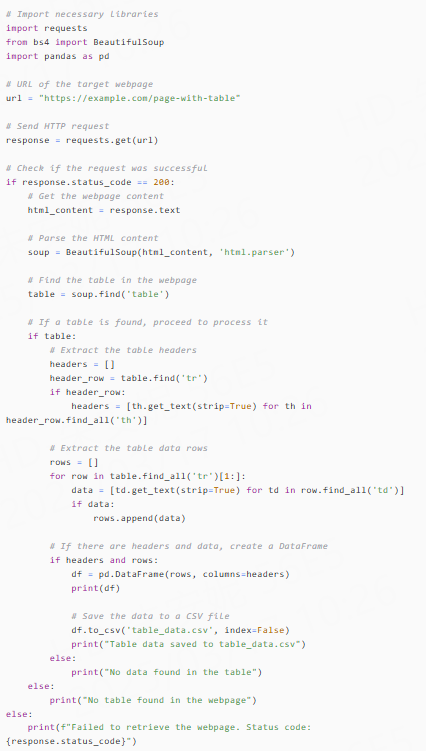

You need to install the requests library to send HTTP requests, the BeautifulSoup library to parse HTML pages, and the pandas library to process table data, for example with `pip install requests beautifulsoup4 pandas`.

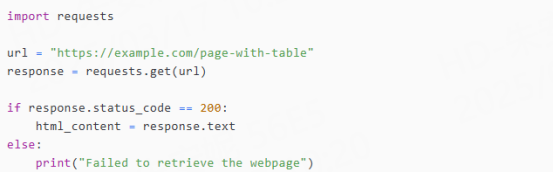

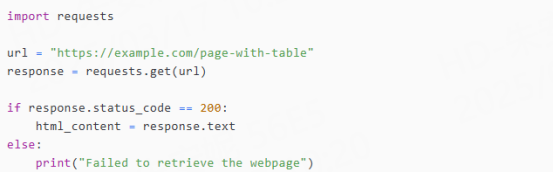

2. Send HTTP requests to get web page content

Use the requests library to send a GET request to the target website to get the HTML content of the web page.
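A minimal sketch of this step might look like the following. The function name `fetch_html`, the 10-second timeout, and the URL in the comment are illustrative assumptions, not part of any particular site's setup.

```python
import requests


def fetch_html(url, timeout=10):
    """Send a GET request and return the page's HTML as text."""
    response = requests.get(url, timeout=timeout)
    # Raise an exception for 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    return response.text


# Example call (placeholder URL, replace it with a page you are allowed to scrape):
# html = fetch_html("https://example.com/table-page")
```

Setting a timeout keeps the script from hanging indefinitely if the server does not respond.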

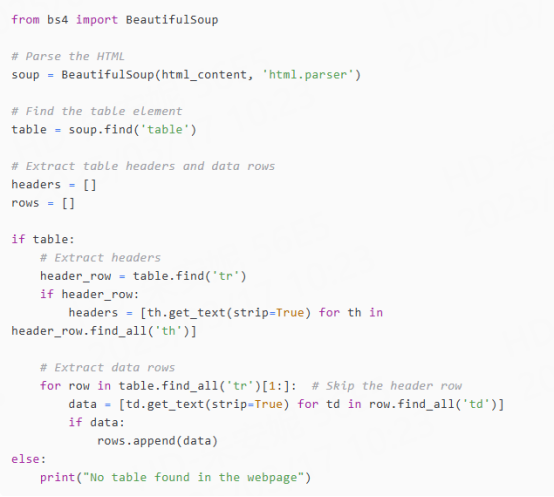

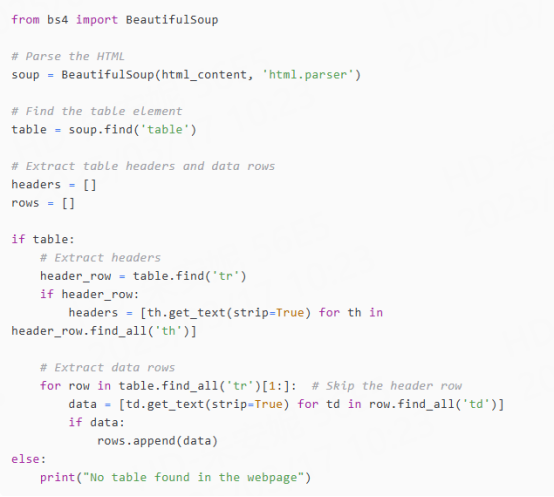

3. Parse HTML and extract table data

Use BeautifulSoup to parse HTML content, find table elements and extract data from them.
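The sketch below shows one way to do this with BeautifulSoup. It works on an inline HTML string with made-up data so that it runs without network access; the helper name `extract_table_rows` is hypothetical, and the code assumes the first table on the page is the one you want.

```python
from bs4 import BeautifulSoup

# Made-up sample page standing in for downloaded HTML.
SAMPLE_HTML = """
<html><body>
<table>
  <tr><th>City</th><th>Population</th></tr>
  <tr><td>Oslo</td><td>700000</td></tr>
  <tr><td>Bergen</td><td>285000</td></tr>
</table>
</body></html>
"""


def extract_table_rows(html):
    """Return the first table on the page as a list of rows (lists of cell text)."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        return []
    rows = []
    for tr in table.find_all("tr"):
        # Collect header (<th>) and data (<td>) cells in document order.
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        if cells:
            rows.append(cells)
    return rows


rows = extract_table_rows(SAMPLE_HTML)
print(rows)
```

If the page contains several tables, `soup.find_all("table")` or a more specific selector (for example by `id` or `class`) can be used to pick the right one.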

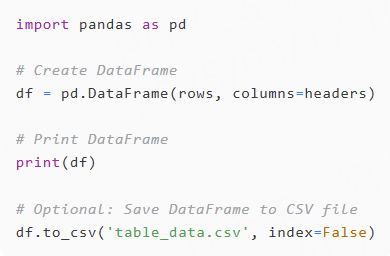

4. Store data in a suitable data structure

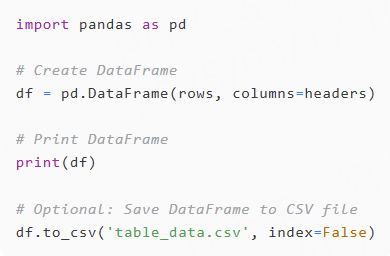

The extracted table data can be stored in a data structure such as a list or dictionary, or loaded directly into a pandas DataFrame for subsequent processing and analysis.
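As a small illustration, assuming the rows were extracted as a list of lists whose first row is the header (the values here are made up), they could be loaded into a DataFrame or turned into a list of dictionaries like this:

```python
import pandas as pd

# Made-up rows as they might come out of the parsing step (all cells are strings).
rows = [
    ["City", "Population"],
    ["Oslo", "700000"],
    ["Bergen", "285000"],
]

# Treat the first row as the header and the rest as data.
header, *body = rows
df = pd.DataFrame(body, columns=header)

# Scraped cells are text, so convert numeric columns explicitly.
df["Population"] = pd.to_numeric(df["Population"])

# The same data as a list of dictionaries, one per table row.
records = df.to_dict(orient="records")
print(df)
print(records)
```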

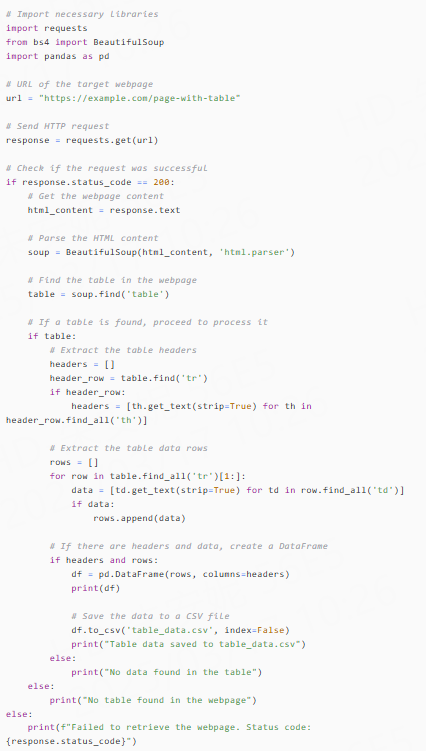

Complete code example

The following is a complete code example showing how to crawl table data from a web page and save it as a CSV file:
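This is a hedged sketch that combines the steps above rather than a definitive implementation. The function names, the placeholder URL, and the output file name are assumptions for illustration. It also assumes the first table on the page is the target, that its first row is the header, and that every row has the same number of cells.

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Download the page and return its HTML text."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    return response.text


def html_table_to_dataframe(html):
    """Parse the first <table> in the HTML into a DataFrame."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        raise ValueError("no <table> element found in the page")
    rows = []
    for tr in table.find_all("tr"):
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        if cells:
            rows.append(cells)
    if not rows:
        raise ValueError("the table contains no rows")
    # Assumption: the first row holds the column names.
    header, *body = rows
    return pd.DataFrame(body, columns=header)


def scrape_table_to_csv(url, csv_path):
    """Fetch a page, extract its first table, and save it as a CSV file."""
    html = fetch_html(url)
    df = html_table_to_dataframe(html)
    df.to_csv(csv_path, index=False, encoding="utf-8")
    return df


# Example call (placeholder URL, replace it with a page you are allowed to scrape):
# scrape_table_to_csv("https://example.com/table-page", "table_data.csv")
```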

Common errors

When using Python to crawl tables on a web page, you may encounter some common errors. To avoid these problems, you need to pay attention to the following points:

HTTP status code errors

Server errors: such as 500 (internal server error), 502 (bad gateway), and 503 (service unavailable). These errors are usually server-side problems and may be temporary.

Client errors: such as 403 (forbidden) and 429 (too many requests). These errors indicate that the request was rejected by the server.
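One common way to handle these status codes is to retry temporary failures with an increasing delay and to fail immediately on the others. The sketch below is one possible approach, not the only one: the function name, the set of retryable codes, and the delay values are assumptions, and the `get` and `sleep` parameters exist only so the logic can be exercised without a real server.

```python
import time

import requests

# Assumption: these codes are treated as temporary and worth retrying.
RETRYABLE_STATUS = {429, 500, 502, 503}


def get_with_retries(url, max_attempts=4, base_delay=1.0, timeout=10,
                     get=requests.get, sleep=time.sleep):
    """GET a URL, retrying temporary errors with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    response = None
    for attempt in range(1, max_attempts + 1):
        response = get(url, timeout=timeout)
        if response.status_code not in RETRYABLE_STATUS:
            # Non-retryable errors such as 403 raise right away.
            response.raise_for_status()
            return response
        if attempt < max_attempts:
            # Wait 1x, 2x, 4x, ... the base delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
    # Every attempt returned a retryable error: surface the last one.
    response.raise_for_status()
    return response
```

A 403 is not retried here because repeating a rejected request rarely helps; it usually means the request itself needs to change.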

Parsing error

Website structure changes: The website may update its HTML structure, causing the original parsing code to become invalid.

Dynamic content loading: Some websites use JavaScript to dynamically load data, and directly crawling HTML may not be able to obtain the complete content. Use tools such as Selenium or Puppeteer to render JavaScript, and ensure that dynamic content is loaded before crawling.

Non-standard HTML tags: The website may have non-standard HTML tags, resulting in parsing failure. Use a more fault-tolerant parsing library such as BeautifulSoup.

Detected and blocked by the website

Default User-Agent: Requests sent with the default User-Agent of a Python HTTP library (for example `python-requests/x.y`) may be identified as a crawler by the website.

Request frequency is too high: Frequent requests may cause your IP address to be blocked.

Not reusing sessions: Opening a new connection for every request may be identified as abnormal behavior.
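The three points above can be addressed together by reusing one session with an explicit User-Agent and pausing between requests. This is a minimal sketch: the function names, the User-Agent string with its contact address, and the two-second delay are hypothetical values chosen for illustration.

```python
import time

import requests


def make_session(user_agent):
    """Create a reusable session that sends a custom User-Agent header."""
    session = requests.Session()
    session.headers.update({"User-Agent": user_agent})
    return session


def polite_get_all(session, urls, delay_seconds=2.0, timeout=10):
    """Fetch several pages over one session, pausing between requests."""
    pages = []
    for index, url in enumerate(urls):
        if index > 0:
            # Spread requests out so the server is not hit in a burst.
            time.sleep(delay_seconds)
        response = session.get(url, timeout=timeout)
        response.raise_for_status()
        pages.append(response.text)
    return pages


# Example (hypothetical identity and placeholder URLs):
# session = make_session("my-table-scraper/0.1 (contact: you@example.com)")
# pages = polite_get_all(session, ["https://example.com/page1",
#                                  "https://example.com/page2"])
```

A session also keeps cookies and reuses the underlying connection, which is closer to how a browser behaves than opening a fresh connection each time.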

CAPTCHA verification

Triggering CAPTCHA: If the website identifies the crawler's behavior as abnormal, you may encounter a CAPTCHA. You can reduce the request frequency or change the proxy IP.

Legal and ethical issues

Comply with website policies: Make sure to comply with the target website's `robots.txt` file and terms of use.

Transparent identity: Add contact information in the request header so that the website administrator can contact you if there is a problem.
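Checking `robots.txt` can be automated with Python's standard library. The sketch below parses a made-up `robots.txt` from a string so that it runs offline; the helper name `is_allowed`, the user agent name, and the URLs are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Made-up robots.txt content for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

print(is_allowed(SAMPLE_ROBOTS, "my-table-scraper", "https://example.com/data/table.html"))
print(is_allowed(SAMPLE_ROBOTS, "my-table-scraper", "https://example.com/private/report.html"))
```

Against a live site, `RobotFileParser` can also download the file itself through its `set_url()` and `read()` methods. Note that `robots.txt` does not replace reading the site's terms of use.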

By following the above best practices, you can effectively reduce the errors encountered during the crawling process and improve the stability and efficiency of the crawling.