Residential Proxies added 300,000 US IP on April 9th.

AI web data scraping exclusive proxy plan [ Unlimited traffic, 100G+ bandwidth ]


This article provides a step-by-step tutorial on scraping Amazon web pages with Python.

1. Preparation

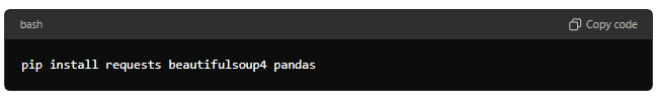

Before you start scraping, make sure you have installed the following Python libraries:

requests: used to send HTTP requests.

BeautifulSoup: used to parse HTML content.

pandas (optional): used for data processing and storage.

You can install these libraries with pip: `pip install requests beautifulsoup4 pandas` (the BeautifulSoup package is published on PyPI as `beautifulsoup4`).

2. Send HTTP request

First, send an HTTP request to the Amazon web page to retrieve its HTML content.
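Below is a minimal sketch of this step using requests. Several details are illustrative choices and are not required by Amazon: the User-Agent string, the Accept-Language header, the 10-second timeout, and the function name `fetch_page`.

```python
import requests

# Illustrative desktop-browser headers; any current browser User-Agent works similarly.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/124.0.0.0 Safari/537.36"
    ),
    "Accept-Language": "en-US,en;q=0.9",
}


def fetch_page(url, session=None, timeout=10):
    """Download a page and return its HTML text (hypothetical helper)."""
    # Reuse a caller-supplied session if given, otherwise open a new one.
    session = session or requests.Session()
    response = session.get(url, headers=HEADERS, timeout=timeout)
    # Raise requests.HTTPError on 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    return response.text
```

For example, `fetch_page("https://www.amazon.com/s?k=laptop")` would return the HTML of a search results page (the search URL is only an example). Passing one shared `requests.Session` across calls lets requests reuse connections and cookies.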

Setting a browser-like User-Agent header makes the request look as if it came from a regular browser, which can reduce the risk of being blocked by the website.

3. Parse web page content

Next, use BeautifulSoup to parse the downloaded HTML and extract the data you need.
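As a minimal sketch, an HTML string can be turned into a searchable tree as shown below. The sample markup is made up for illustration. The built-in `"html.parser"` backend is an assumption; lxml can be used instead if it is installed.

```python
from bs4 import BeautifulSoup


def parse_html(html):
    # "html.parser" ships with Python, so no extra parser package is needed.
    return BeautifulSoup(html, "html.parser")


# Invented sample markup standing in for a downloaded page.
sample_page = "<html><head><title>Amazon.com : laptop</title></head><body></body></html>"
soup = parse_html(sample_page)
print(soup.title.get_text(strip=True))  # Amazon.com : laptop
```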

For example, you can locate each product element on a search results page and read its name and price.
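The sketch below assumes search-result markup with three features:

* Each product sits in a `div` with `data-component-type="s-search-result"`.
* The product name is inside an `h2`.
* The price is in `span.a-price span.a-offscreen`.

These selectors are assumptions based on commonly seen Amazon markup and may not match the live page, so check them in your browser's developer tools. The helper name `extract_products` and the sample HTML are hypothetical.

```python
from bs4 import BeautifulSoup

# Assumed CSS selectors; update them when Amazon changes its markup.
ITEM_SELECTOR = 'div[data-component-type="s-search-result"]'
NAME_SELECTOR = "h2 span"
PRICE_SELECTOR = "span.a-price span.a-offscreen"


def extract_products(soup):
    """Return a list of {"name", "price"} dicts, using None for missing fields."""
    products = []
    for item in soup.select(ITEM_SELECTOR):
        name_tag = item.select_one(NAME_SELECTOR)
        price_tag = item.select_one(PRICE_SELECTOR)
        products.append({
            "name": name_tag.get_text(strip=True) if name_tag else None,
            "price": price_tag.get_text(strip=True) if price_tag else None,
        })
    return products


# Invented sample: one listing with a price, one without.
SAMPLE_RESULTS = """
<div data-component-type="s-search-result">
  <h2><a href="#"><span>Example Laptop 15 inch</span></a></h2>
  <span class="a-price"><span class="a-offscreen">$499.99</span></span>
</div>
<div data-component-type="s-search-result">
  <h2><span>Example Mouse</span></h2>
</div>
"""
print(extract_products(BeautifulSoup(SAMPLE_RESULTS, "html.parser")))
```

Listings without a price get `None` instead of raising an `AttributeError`. This matters because some listings may omit a field.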

4. Process data

The scraped data usually needs further processing before it is stored. You can use pandas to save it as a CSV file.
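Below is a minimal pandas sketch of this step. It assumes the scraped records are a list of dictionaries with `"name"` and `"price"` keys. That format, the helper name `save_products`, and the default file name are all hypothetical.

```python
import pandas as pd


def save_products(products, path="amazon_products.csv"):
    """Store scraped records as CSV and return the DataFrame (hypothetical helper)."""
    df = pd.DataFrame(products, columns=["name", "price"])
    # Strip currency symbols and thousands separators ("$1,299.99" -> 1299.99).
    # Missing or unparseable prices become NaN.
    df["price_value"] = pd.to_numeric(
        df["price"].str.replace(r"[^0-9.]", "", regex=True), errors="coerce"
    )
    # index=False keeps pandas' row index out of the file.
    df.to_csv(path, index=False, encoding="utf-8")
    return df
```

The extra `price_value` column makes prices sortable and averageable. Its conversion assumes US-style number formatting with a period as the decimal separator.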

5. Notes

Website structure: Amazon's page structure changes frequently, so the selectors in your scraping code may need to be updated accordingly.

Anti-scraping measures: Amazon uses strict anti-scraping measures, and frequent requests may get your IP address blocked. Adding delays between requests and routing traffic through proxies can reduce this risk.

Legality: Follow Amazon's terms of service when scraping, and make sure you use the data lawfully.
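The delay and proxy advice in the anti-scraping note can be sketched with requests, which accepts a `proxies` mapping from URL scheme to proxy URL. Several details are assumptions: the function name `fetch_politely`, the 2 to 5 second delay range, and the proxy URL format. Substitute the endpoint and credentials your proxy provider gives you.

```python
import random
import time

import requests

# Illustrative desktop-browser User-Agent string.
USER_AGENT = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
    "AppleWebKit/537.36 (KHTML, like Gecko) "
    "Chrome/124.0.0.0 Safari/537.36"
)


def fetch_politely(urls, proxy_url=None, min_delay=2.0, max_delay=5.0, session=None):
    """Fetch URLs one at a time with a random pause between requests.

    proxy_url is a hypothetical endpoint such as "http://user:pass@host:port".
    Returns {url: (status_code, html)} so callers can spot blocked responses.
    """
    session = session or requests.Session()
    # requests expects a mapping from URL scheme to proxy URL.
    proxies = {"http": proxy_url, "https": proxy_url} if proxy_url else None
    results = {}
    for i, url in enumerate(urls):
        if i > 0:
            # A randomized pause avoids a fixed, easily detected request rhythm.
            time.sleep(random.uniform(min_delay, max_delay))
        response = session.get(
            url, headers={"User-Agent": USER_AGENT}, proxies=proxies, timeout=10
        )
        results[url] = (response.status_code, response.text)
    return results
```

Returning the status code alongside the HTML, rather than raising, lets the caller decide whether to slow down or switch proxies when non-200 responses start to appear.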
