1. Why do you need to use a proxy to crawl YouTube data?

When crawling YouTube data, especially at large scale, using a proxy server is a sensible choice. A proxy hides your real IP address, which reduces the risk of YouTube blocking you for sending frequent requests. A proxy can also help you access region-restricted content by routing your requests through an IP address in another location.

Suppose you are a data analyst who needs video data from around the world for market analysis. YouTube content restrictions differ between countries and regions, so crawling all of this data directly from one location can be difficult. Using proxy servers in several regions lets you collect data from each of them, which makes the dataset more complete and more diverse.

2. Preparation: Install Python and necessary libraries

Before you start crawling data, make sure that Python and the required libraries are installed. If you don't have Python installed yet, download it from the official Python website. Once it is installed, use pip to install the following libraries (pip install beautifulsoup4 requests):

· beautifulsoup4: used to parse HTML content.

· requests: used to send HTTP requests.

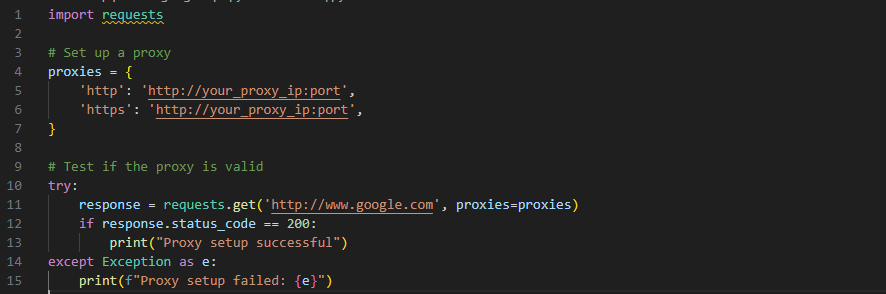
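As a quick sanity check after running pip install beautifulsoup4 requests, the short sketch below reports which of the two libraries cannot be imported yet. The helper name missing_packages is hypothetical and only used for illustration; it relies solely on the standard library.

```python
import importlib.util

# Maps the pip package name to the module name you actually import.
REQUIRED = {"beautifulsoup4": "bs4", "requests": "requests"}


def missing_packages(required=None):
    """Return the pip names of packages whose modules cannot be found."""
    required = REQUIRED if required is None else required
    return [
        pip_name
        for pip_name, module_name in required.items()
        if importlib.util.find_spec(module_name) is None
    ]


# Prints an empty list when both libraries are installed.
print("Missing packages:", missing_packages())
```

If the list is not empty, install the named packages with pip and run the check again.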

3. Set up a proxy

A proxy server can help you hide your real IP address and avoid being blocked by a website. When you send a request through a proxy, the website will think that the request is sent from the proxy IP instead of your real IP.
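A minimal sketch of the proxy configuration might look like the following. It assumes a plain HTTP proxy; the helper build_proxies is a hypothetical name introduced here, and your_proxy_ip:port is a placeholder rather than a working address.

```python
# Placeholder address: replace your_proxy_ip:port with a real proxy host and port.
PROXY_ADDRESS = "your_proxy_ip:port"


def build_proxies(address):
    """Build a requests-style proxies mapping for a single HTTP proxy.

    Assumption: the proxy speaks plain HTTP, so the same proxy URL is used
    for both http and https traffic. Other proxy types need another scheme.
    """
    proxy_url = f"http://{address}"
    return {"http": proxy_url, "https": proxy_url}


proxies = build_proxies(PROXY_ADDRESS)
print(proxies)
```

The resulting dictionary can be passed to requests through the proxies argument, for example requests.get(url, proxies=proxies).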

In this code, the proxies dictionary is used to store the address of the proxy server. You need to replace your_proxy_ip:port with the actual proxy IP and port.

4. Crawl YouTube pages

Once the proxy is set up, you can fetch YouTube pages through it. Next, we use BeautifulSoup to parse the YouTube video page and read the video title.
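The fetch-and-parse step could be sketched as below. The function names fetch_html and parse_title, the VIDEO_ID placeholder, and the timeout value are assumptions made for illustration. The title is read from the page's title tag, which is one simple option and may not match how YouTube structures its pages in the future.

```python
import requests
from bs4 import BeautifulSoup

# Placeholder values: substitute a real proxy address and video URL.
proxies = {
    "http": "http://your_proxy_ip:port",
    "https": "http://your_proxy_ip:port",
}
url = "https://www.youtube.com/watch?v=VIDEO_ID"  # hypothetical video URL


def fetch_html(url, proxies, timeout=10):
    """Download a page through the proxy and return its HTML text."""
    response = requests.get(url, proxies=proxies, timeout=timeout)
    response.raise_for_status()  # raise on 4xx/5xx instead of parsing an error page
    return response.text


def parse_title(html):
    """Return the text of the <title> tag, or None if the page has none."""
    soup = BeautifulSoup(html, "html.parser")
    return soup.title.get_text(strip=True) if soup.title else None


# Typical use once the placeholders are replaced:
# html = fetch_html(url, proxies)
# print(parse_title(html))
```

Keeping the download and the parsing in separate functions makes it possible to test the parsing on saved HTML without sending any requests.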

url: Replace with the URL of the YouTube video page you want to crawl.

BeautifulSoup: Parses the page content into a navigable object that makes it easier to find and extract information.

5. Extract more data

In addition to the video title, you can also extract other data, such as the video description, upload date, and number of views. Here is some sample code:
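The sketch below assumes that the video page exposes this information in meta tags (name="title", name="description", itemprop="datePublished", itemprop="interactionCount"). These tag names are an assumption about YouTube's markup, which changes over time, so inspect the page source and adjust them as needed. The helper names are hypothetical.

```python
from bs4 import BeautifulSoup


def meta_content(soup, attr_name, attr_value):
    """Return the content attribute of the first matching <meta> tag, or None."""
    tag = soup.find("meta", attrs={attr_name: attr_value})
    return tag.get("content") if tag else None


def extract_video_details(html):
    """Collect basic video details from the page's meta tags.

    Assumption: the tag names below match the page being parsed.
    Fields that are not found are returned as None.
    """
    soup = BeautifulSoup(html, "html.parser")
    return {
        "title": meta_content(soup, "name", "title"),
        "description": meta_content(soup, "name", "description"),
        "upload_date": meta_content(soup, "itemprop", "datePublished"),
        "views": meta_content(soup, "itemprop", "interactionCount"),
    }
```

Every value is returned as a string exactly as it appears in the page, so convert the view count to an integer before doing arithmetic with it.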

This code uses the find method of BeautifulSoup to locate specific HTML elements and extract the data they contain.

6. Extended functions

If you want to further expand the crawling function, you can consider the following points:

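Crawling comment data: Get user comments under the video by parsing the content of the comment area. Note that YouTube loads comments dynamically with JavaScript, so they are generally not present in the HTML returned by a plain requests call.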

Batch crawling: Write a script to crawl data of multiple videos at once and save the results to a file or database.

Data analysis: Use the crawled data for subsequent analysis, such as user behavior analysis, trend prediction, etc.
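The batch crawling idea above can be sketched as a loop that scrapes each URL and writes one row per video to a CSV file. The function crawl_to_csv and its parameters are hypothetical. The per-video scraper is passed in as scrape_one, which is assumed to take a URL and return a dictionary with a "title" key, so any scraping function of that shape can be plugged in.

```python
import csv
import time


def crawl_to_csv(urls, scrape_one, out_path, delay_seconds=1.0):
    """Scrape each URL with scrape_one and save the results to a CSV file.

    A failure on one URL is recorded in the "error" column instead of
    stopping the whole batch. Returns the number of rows written.
    """
    rows_written = 0
    with open(out_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=["url", "title", "error"])
        writer.writeheader()
        for index, url in enumerate(urls):
            if index:
                time.sleep(delay_seconds)  # pause between requests
            try:
                details = scrape_one(url)
                row = {"url": url, "title": details.get("title"), "error": ""}
            except Exception as exc:
                row = {"url": url, "title": "", "error": str(exc)}
            writer.writerow(row)
            rows_written += 1
    return rows_written
```

The delay between requests keeps the request rate low, which complements the proxy in reducing the chance of being blocked.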

7. Summary

In this article, you have learned how to use Python and BeautifulSoup to crawl YouTube data, and how to use a proxy to reduce the risk of your IP address being blocked. Crawling YouTube data can provide you with a rich source of information for various kinds of analysis and research.