How to use proxy IPs to crawl Google Maps data: a step-by-step tutorial for 2024

1. Benefits of crawling Google Maps

Let's start with the "why". Google Maps holds a large, constantly updated body of valuable data. It lists restaurants, cafes, bars, supermarkets, hotels, pharmacies, auto repair shops, gyms, historical landmarks, theaters, parks, and much more, covering almost every category of interest.

Data extracted from Google Maps can be a key resource for businesses and analysts. It supports many applications, such as market research, price aggregation, brand monitoring, and competitor analysis. This information can also inform customer engagement strategies, location planning, and service optimization, helping businesses strengthen their competitive position across industries.

2. How to crawl

As one of the world's most widely used map services, Google Maps offers rich geographic information and data resources that developers and enterprises can draw on in a wide range of applications.

This article walks through the steps for crawling Google Maps data with the Python programming language, using proxy IPs to route the requests.

Step 1: Preparation and Environment Setup

Before you begin, make sure you have installed the following necessary tools and libraries in your development environment:

Python programming environment (latest version recommended)

Requests library (for sending HTTP requests)

Beautiful Soup or lxml (for parsing HTML data)

Proxy IP service (for hiding your real IP address)
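To confirm the environment is ready, a short check like the following can help. It is a sketch that looks each library up by its import name with `importlib.util.find_spec`; note that Beautiful Soup is installed as `beautifulsoup4` but imported as `bs4`, and only one of `bs4` or `lxml` is strictly needed. Anything reported as missing can be installed with, for example, `pip install requests beautifulsoup4 lxml pandas`.

```python
import importlib.util

# Import names (not pip package names) of the libraries listed above.
# pandas is included because it is suggested later for analysis (Step 5).
REQUIRED = ["requests", "bs4", "lxml", "pandas"]


def missing_packages(names: list[str]) -> list[str]:
    """Return the import names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All required libraries are installed.")
```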

Step 2: Get the URL of the Google Maps data

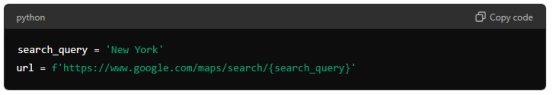

First, decide which Google Maps data you need to crawl, such as a search results page or the map data for a specific area. For example, searching for a place produces a search results page with its own URL, which your crawler can then request.
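A minimal sketch of building such a search URL from a free-text query. It assumes Google's documented "Maps URLs" search format (`https://www.google.com/maps/search/?api=1&query=...`); the helper name `maps_search_url` is illustrative. Whether a plain HTTP client receives the same content a browser shows at that URL is not guaranteed.

```python
from urllib.parse import urlencode

# Base path of the Google Maps URLs "search" action; api=1 is required by that format.
MAPS_SEARCH_BASE = "https://www.google.com/maps/search/"


def maps_search_url(query: str) -> str:
    """Build a Google Maps search URL for a query such as 'coffee shops in Seattle'."""
    # urlencode percent-encodes the query and turns spaces into '+'.
    return f"{MAPS_SEARCH_BASE}?{urlencode({'api': '1', 'query': query})}"


print(maps_search_url("coffee shops in Seattle"))
# https://www.google.com/maps/search/?api=1&query=coffee+shops+in+Seattle
```

Using `urlencode` rather than string concatenation ensures characters such as `&` or accented letters in the query are encoded correctly.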

Step 3: Send a request with a proxy IP

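Sending many requests from a single IP address makes it likely that Google will flag the traffic and block that address. To reduce this risk, route your requests through a proxy IP service. In Python, this means passing the proxy's address (and any credentials) to the HTTP client so that each request goes through the proxy instead of directly from your machine.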
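A minimal sketch of sending a request through an authenticated HTTP proxy, assuming your provider issues a host, port, username, and password (every value below is a hypothetical placeholder). To keep the sketch dependency-free it uses the standard library's `urllib.request.ProxyHandler` instead of Requests; with Requests, the same dictionary can be passed as `requests.get(url, proxies=proxies, timeout=10)`.

```python
import urllib.parse
import urllib.request

# Hypothetical placeholders: replace with the values from your proxy provider.
PROXY_HOST = "proxy.example.com"
PROXY_PORT = 8000
PROXY_USER = "username"
PROXY_PASS = "password"


def build_proxy_url(user: str, password: str, host: str, port: int) -> str:
    """Return http://user:pass@host:port, percent-encoding the credentials."""
    user_enc = urllib.parse.quote(user, safe="")
    pass_enc = urllib.parse.quote(password, safe="")
    return f"http://{user_enc}:{pass_enc}@{host}:{port}"


def build_proxies(proxy_url: str) -> dict[str, str]:
    """Route both HTTP and HTTPS traffic through the same proxy endpoint."""
    return {"http": proxy_url, "https": proxy_url}


def fetch(url: str, proxies: dict[str, str], timeout: float = 10.0) -> str:
    """Download a page through the proxy and return its body as text."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler(proxies))
    # A browser-like User-Agent; the default Python one may be rejected more often.
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with opener.open(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Usage, once real credentials are filled in: `html = fetch(search_url, build_proxies(build_proxy_url(PROXY_USER, PROXY_PASS, PROXY_HOST, PROXY_PORT)))`. Percent-encoding the credentials matters because characters such as `@` or `:` in a password would otherwise break the proxy URL.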

Step 4: Parse the HTML data

Use a library such as Beautiful Soup or lxml to parse the HTML and extract the information you need, such as place names, addresses, and reviews. A simple first target is the place name.
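A minimal sketch of extracting place names, assuming each name sits in an element with the hypothetical class `place-name` (Google's real class names are obfuscated and change over time, so inspect the page you actually receive). To run without extra installs it uses Python's built-in `html.parser` module in place of Beautiful Soup; with Beautiful Soup, the equivalent is roughly `[el.get_text(" ", strip=True) for el in soup.select(".place-name")]`. Google Maps also builds much of its results list with JavaScript, so the raw HTML may not contain these elements at all.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class PlaceNameParser(HTMLParser):
    """Collect the text inside every element whose class list contains target_class."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.depth = 0                   # > 0 while inside a matching element
        self.current: list[str] = []     # text chunks of the element being read
        self.names: list[str] = []       # finished, whitespace-normalized names

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1              # nested tag inside a matching element
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            # Join the text chunks and collapse runs of whitespace into single spaces.
            self.names.append(" ".join(" ".join(self.current).split()))

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def extract_place_names(html: str, target_class: str = "place-name") -> list[str]:
    """Return the text of all elements carrying target_class (a hypothetical class)."""
    parser = PlaceNameParser(target_class)
    parser.feed(html)
    parser.close()
    return parser.names
```

For example, `extract_place_names('<div><h3 class="place-name">Blue Bottle <b>Coffee</b></h3></div>')` returns `['Blue Bottle Coffee']`. Tracking nesting depth lets the parser keep text from inner tags such as `<b>` while ignoring everything outside the matching element.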

Step 5: Storage and further analysis

Store the crawled data in a suitable format, such as a CSV file or a database, for further analysis and use. Libraries such as pandas can then process and visualize the data to meet your specific analysis needs.
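A minimal sketch of saving extracted records to CSV with the standard library's `csv` module; the column names in `FIELDS` are hypothetical and should match whatever your parser extracts. With pandas installed, `pandas.read_csv("places.csv")` loads the file back as a DataFrame for analysis, and `pd.DataFrame(rows).to_csv("places.csv", index=False)` is a pandas alternative for the writing step.

```python
import csv
import io

# Hypothetical columns; match them to whatever your parser actually extracts.
FIELDS = ["name", "address", "rating"]


def places_to_csv(rows: list[dict]) -> str:
    """Serialize place records to CSV text; missing fields become empty cells."""
    buffer = io.StringIO()
    # extrasaction="ignore" drops any keys that are not listed in FIELDS.
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def save_places(rows: list[dict], path: str) -> None:
    """Write the records to a UTF-8 CSV file (newline='' as the csv docs recommend)."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(places_to_csv(rows))
```

Usage: `save_places([{"name": "Example Cafe", "address": "123 Example St", "rating": 4.5}], "places.csv")`. The `csv` module quotes fields that contain commas, so multi-part addresses survive the round trip intact.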

3. Choose the most useful proxy IP provider

Global IP network: LunaProxy operates a large network of IP addresses covering 195 countries around the world. The most popular regions are Japan, Germany, South Korea, the United States, and the United Kingdom.

200 million+ high-quality residential proxies

Expand your business with the world's most cost-effective proxies: easily set up and use 200 million+ residential proxies and connect to country- or city-level locations around the world, enabling you to collect public data effectively.

Diversity: LunaProxy offers a variety of proxy types to meet different needs, such as rotating residential, static residential, data center, and ISP proxies.

Flexible plans: LunaProxy offers a variety of plans with different proxy types and pricing options. Customers can choose packages based on the number of IPs or bandwidth according to their needs.