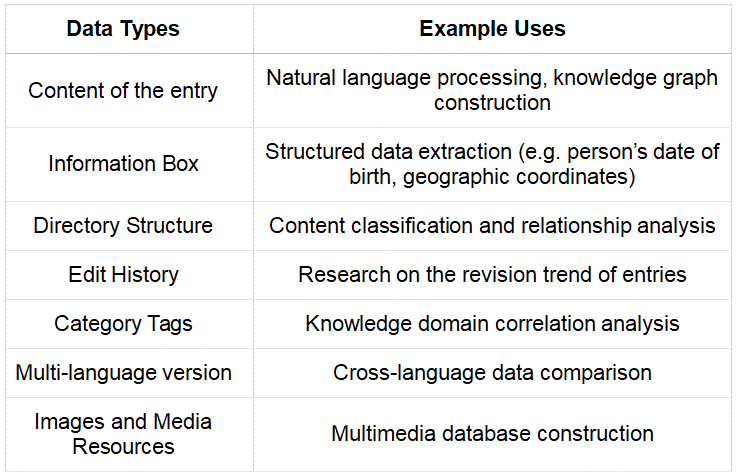

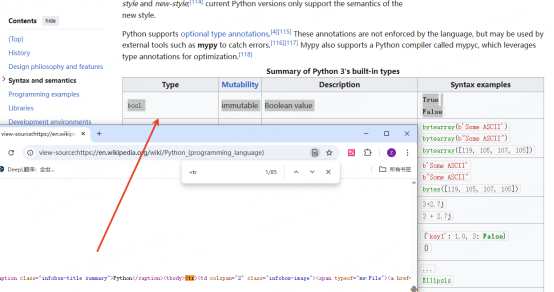

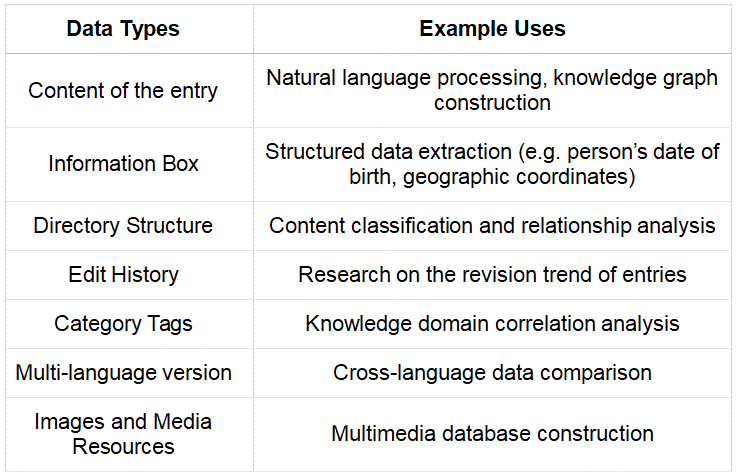

Data types from Wikipedia

As the world's largest free online encyclopedia, Wikipedia has more than 60 million articles in multiple languages. Its structured pages and open licensing (most of its text is available under CC BY-SA) make it an ideal target for web scraping. The data you can obtain by scraping Wikipedia falls into four main types:

Text Data

Scraped text data can be used for linguistic research, such as analyzing usage habits and word frequencies across languages. For example, you could count the distribution of parts of speech in English articles. Text can also be used to build a corpus that provides training data for natural language processing tasks in machine learning, such as training sentiment analysis and text generation models.
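As a rough illustration of the word-frequency analysis mentioned above, the sketch below counts words across a list of scraped paragraphs. `word_frequencies` is a hypothetical helper, and the tokenization (lowercase runs of letters, no stop-word removal) is a simplifying assumption; real linguistic work would use a proper tokenizer and part-of-speech tagger.

```python
import re
from collections import Counter


def word_frequencies(paragraphs, top_n=10):
    """Return the top_n most common words across a list of paragraph strings.

    Simplified tokenization (an assumption): lowercase runs of letters,
    optionally with one internal apostrophe, e.g. "python", "isn't".
    Common words such as "the" are NOT filtered out.
    """
    counts = Counter()
    for text in paragraphs:
        counts.update(re.findall(r"[a-z]+(?:'[a-z]+)?", text.lower()))
    return counts.most_common(top_n)


sample = [
    "Python is a high-level programming language.",
    "Python supports multiple programming paradigms.",
]
print(word_frequencies(sample, top_n=3))
```

In practice you would pass in the paragraph list collected by the scraping script and usually drop stop words before counting.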

Tabular Data

Tables are a natural input for data analysis. Scraped tables provide structured information such as timelines of historical events, comparisons of economic data across countries, and sports rankings, which can then be analyzed and visualized.
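The scraping script later in this article stores each table as a list of rows, where each row is a list of cell strings. A minimal sketch, assuming the first row is the header, of turning such rows into records that are easy to analyze; `rows_to_records` is a hypothetical helper and the sample table is hand-made:

```python
def rows_to_records(rows):
    """Turn a table (list of rows of cell strings) into a list of dicts.

    Assumes the first row is the header. Rows whose cell count differs from
    the header (common with merged rowspan/colspan cells) are skipped
    rather than guessed at.
    """
    if not rows:
        return []
    header, *body = rows
    return [dict(zip(header, row)) for row in body if len(row) == len(header)]


table = [
    ["Year", "Version"],
    ["2008", "Python 3.0"],
    ["2020", "Python 3.9"],
]
for record in rows_to_records(table):
    print(record["Year"], record["Version"])
```

If pandas is installed, `pandas.DataFrame(rows_to_records(table))` turns the records into a DataFrame for further analysis and plotting. Skipping ragged rows is a deliberate simplification; tables with merged cells need more careful handling.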

Image Data

Wikipedia images can be used in machine learning projects related to image recognition, such as training image classification and object detection models. They can also support cultural research, such as analyzing how artistic styles evolved across historical periods: for example, collecting images of paintings from different periods and studying how painting styles changed.
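Before images can be downloaded into a dataset, their addresses need to be absolute. On Wikipedia pages, `<img>` `src` values are usually protocol-relative (they start with `//upload.wikimedia.org/...`). A minimal sketch using the standard library's `urljoin`; `absolute_image_urls` is a hypothetical helper and the sample paths are made up:

```python
from urllib.parse import urljoin

PAGE_URL = "https://en.wikipedia.org/wiki/Python_(programming_language)"


def absolute_image_urls(srcs, page_url=PAGE_URL):
    """Resolve <img src> values against the page URL; drop empty values and duplicates."""
    urls = (urljoin(page_url, src) for src in srcs if src)
    return list(dict.fromkeys(urls))  # dict keys de-duplicate while keeping first-seen order


# Hypothetical src values of the kinds found on Wikipedia pages
srcs = [
    "//upload.wikimedia.org/wikipedia/commons/example.png",
    "",
    "//upload.wikimedia.org/wikipedia/commons/example.png",
    "/static/images/example-icon.png",
]
for url in absolute_image_urls(srcs):
    print(url)
```

`urljoin` leaves already-absolute URLs unchanged, so it is safer than prefixing `https:` by hand.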

Linked Data

By analyzing the link structure, you can see how articles reference and relate to one another, which helps in building knowledge graphs and recommendation systems. For example, the links between pages can be used to recommend other pages related to the one currently being read.
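A toy sketch of the recommendation idea above: represent each page by the set of article titles it links to, then rank other pages by Jaccard similarity (shared links divided by all links). The link graph is a small hand-made example, and Jaccard similarity is just one simple choice of measure, not how any particular recommender works; `jaccard` and `recommend` are hypothetical helpers.

```python
def jaccard(a, b):
    """Jaccard similarity of two sets: shared items / all items (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(current, link_graph, top_n=3):
    """Rank other pages by how much their outgoing links overlap with the current page's.

    link_graph maps a page title to the set of titles that page links to.
    Pages with no overlap at all are left out.
    """
    current_links = link_graph[current]
    scored = [
        (jaccard(current_links, links), title)
        for title, links in link_graph.items()
        if title != current
    ]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, ties by title
    return [title for score, title in scored if score > 0][:top_n]


# A tiny hand-made link graph (hypothetical data)
link_graph = {
    "Python (programming language)": {"Guido van Rossum", "CPython", "PyPy", "Dynamic typing"},
    "CPython": {"Guido van Rossum", "PyPy", "C (programming language)"},
    "Ruby (programming language)": {"Dynamic typing", "Yukihiro Matsumoto"},
    "Pizza": {"Italy", "Cheese"},
}
print(recommend("Python (programming language)", link_graph))
```

Here "CPython" shares two of five distinct links with the Python article (0.4) and ranks above the Ruby article (0.2), while "Pizza" shares none and is dropped.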

How to scrape Wikipedia using Python

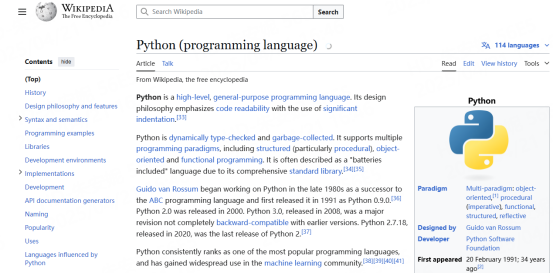

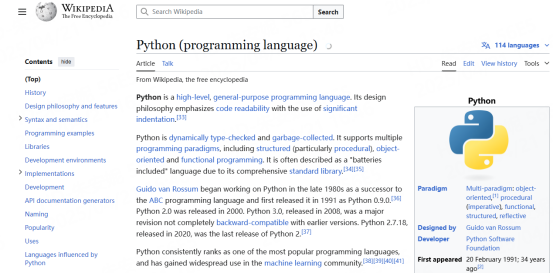

First, decide which Wikipedia page you want to scrape. This tutorial uses the following page as its example: https://en.wikipedia.org/wiki/Python_(programming_language)
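If you start from article titles rather than URLs, you can build the URL yourself, since Wikipedia article URLs use underscores in place of spaces. A minimal sketch; `wikipedia_url` is a hypothetical helper, and leaving parentheses unencoded is a readability choice (percent-encoded forms such as `%28` generally resolve as well):

```python
from urllib.parse import quote


def wikipedia_url(title, lang="en"):
    """Build an article URL from a page title (hypothetical helper).

    Spaces become underscores; characters outside the safe set are
    percent-encoded as UTF-8, while "/" and parentheses are left readable.
    """
    path = quote(title.strip().replace(" ", "_"), safe="/()")
    return f"https://{lang}.wikipedia.org/wiki/{path}"


print(wikipedia_url("Python (programming language)"))
print(wikipedia_url("C++"))
```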

1. Prepare the environment

Before you start scraping Wikipedia data, you need to prepare the Python environment and install the necessary libraries.

```shell
# Create the environment (Windows)
python -m venv venv

# Activate it (Windows)
venv\Scripts\activate
```

```shell
# Create the environment (Mac/Linux)
python3 -m venv venv

# Activate it (Mac/Linux)
source venv/bin/activate
```

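Once activated, the terminal prompt should show a (venv) prefix. Then install the required libraries:

```shell
pip install requests beautifulsoup4 pandas
```

requests: used to send HTTP requests and fetch web page content

beautifulsoup4: used to parse HTML and extract data

pandas: used to organize and analyze tabular data (optional; the scripts in this article run without it)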

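To check that the environment works, run a short test script:

```python
import requests
from bs4 import BeautifulSoup

url = "https://en.wikipedia.org/wiki/Python_(programming_language)"
# Wikimedia asks clients to send a descriptive User-Agent; this value is a placeholder
headers = {"User-Agent": "ExampleWikiScraper/0.1 (contact: you@example.com)"}

response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()  # stop early on HTTP errors such as 403 or 404

soup = BeautifulSoup(response.text, "html.parser")
print("Page Title:", soup.title.text)
```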

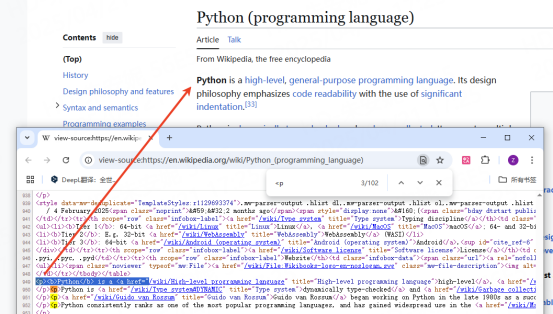

2. Check Page

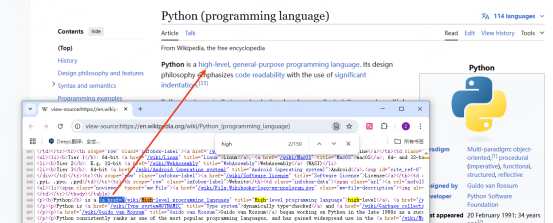

When scraping a Wikipedia page, first make sure the page exists, then inspect its HTML to find where the data you want lives: right-click the page and select View Page Source (or Inspect). Look for these tags:

Links: `<a>` tags, whose `href` attribute holds the target URL.

Images: `<img>` tags, whose `src` attribute holds the image URL.

Tables: `<table>` tags with the `wikitable` class, which help you collect rows and columns.

Paragraphs: `<p>` tags, which contain the main text content.
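To see these tags in action without fetching anything, the sketch below runs Python's built-in `html.parser` over a small hand-written snippet that mimics Wikipedia's markup (the snippet is simplified and hypothetical). It sticks to the standard library to stay dependency-free; the full script below does the same job with BeautifulSoup. `TagSurvey` is a hypothetical class name.

```python
from html.parser import HTMLParser


class TagSurvey(HTMLParser):
    """Record the tags a Wikipedia scraper usually targets."""

    def __init__(self):
        super().__init__()
        self.links = []       # href of every <a>
        self.images = []      # src of every <img>
        self.wikitables = 0   # number of <table class="wikitable ...">
        self.paragraphs = 0   # number of <p>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attrs arrives as a list of (name, value) pairs
        if tag == "a" and attrs.get("href"):
            self.links.append(attrs["href"])
        elif tag == "img" and attrs.get("src"):
            self.images.append(attrs["src"])
        elif tag == "table" and "wikitable" in (attrs.get("class") or "").split():
            self.wikitables += 1
        elif tag == "p":
            self.paragraphs += 1


sample_html = """
<div class="mw-parser-output">
  <p>Python is a <a href="/wiki/Programming_language">programming language</a>.</p>
  <figure><img src="//upload.wikimedia.org/example.png" alt="Logo"></figure>
  <table class="wikitable sortable"><tr><th>Version</th></tr></table>
</div>
"""

survey = TagSurvey()
survey.feed(sample_html)
survey.close()
print(survey.links, survey.images, survey.wikitables, survey.paragraphs)
```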

Here is a complete code example that can extract and save data from a Wikipedia page:

```python
import csv

import requests
from bs4 import BeautifulSoup

# 1. Get the page content
url = "https://en.wikipedia.org/wiki/Python_(programming_language)"
# Wikimedia asks clients to send a descriptive User-Agent; this value is a placeholder
headers = {"User-Agent": "ExampleWikiScraper/0.1 (contact: you@example.com)"}
response = requests.get(url, headers=headers, timeout=10)
response.raise_for_status()  # stop on HTTP errors such as 404 (page does not exist)
soup = BeautifulSoup(response.content, "html.parser")


# 2. Extract core data
def scrape_wikipedia_page(soup):
    data = {"title": "", "text": [], "images": [], "tables": [], "links": []}

    # Extract the title
    data["title"] = soup.find("h1", id="firstHeading").get_text(strip=True)

    # Extract the body text, removing citation markers such as [1]
    for paragraph in soup.select("div.mw-parser-output > p"):
        for ref in paragraph.select("sup.reference"):
            ref.decompose()
        text = paragraph.get_text().strip()
        if text:  # skip empty paragraphs
            data["text"].append(text)

    # Extract images (src, alt text, and the caption of the enclosing <figure>, if any)
    for img in soup.select("div.mw-parser-output img"):
        figure = img.find_parent("figure")
        caption = figure.find("figcaption") if figure else None
        data["images"].append({
            "src": img.get("src", ""),
            "alt": img.get("alt", ""),
            "caption": caption.get_text(strip=True) if caption else "",
        })

    # Extract tables (including the infobox)
    for table in soup.select("table"):
        rows = []
        for row in table.find_all("tr"):
            cells = [cell.get_text(strip=True) for cell in row.find_all(["th", "td"])]
            if cells:
                rows.append(cells)
        if rows:  # skip empty tables
            data["tables"].append(rows)

    # Extract internal links (only links to other Wikipedia pages)
    for link in soup.select('div.mw-parser-output a[href^="/wiki/"]'):
        if ":" not in link["href"]:  # filter namespace pages such as "File:" or "Help:"
            data["links"].append({
                "text": link.get_text(strip=True),
                "url": "https://en.wikipedia.org" + link["href"],
            })

    return data


# 3. Run the scraper
page_data = scrape_wikipedia_page(soup)

# 4. Print some example results
print(f"Title: {page_data['title']}\n")

print("First two paragraphs of text:")
for i, text in enumerate(page_data["text"][:2], 1):
    print(f"{i}. {text[:150]}...")  # truncate for display

print("\nThe first two images:")
for img in page_data["images"][:2]:
    src = img["src"]
    full_src = "https:" + src if src.startswith("//") else src  # add the protocol if missing
    print(f"Description: {img['alt']}\nLink: {full_src}")

if page_data["tables"]:
    print("\nThe first two rows of the first table:")
    for row in page_data["tables"][0][:2]:  # the first table is usually the infobox
        print(row)

print("\nFirst five links:")
for link in page_data["links"][:5]:
    print(f"{link['text']}: {link['url']}")

# 5. Save as a CSV file (example: the link data)
with open("wikipedia_links.csv", "w", newline="", encoding="utf-8") as f:
    writer = csv.writer(f)
    writer.writerow(["Link Text", "URL"])
    for link in page_data["links"]:
        writer.writerow([link["text"], link["url"]])
```
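The script above saves only the link data. The extracted tables can be written out the same way; here is a minimal sketch that writes each table in `page_data["tables"]` to its own CSV file. The `save_tables` helper, the `wikipedia_tables` folder, and the `table_N.csv` naming scheme are all hypothetical choices.

```python
import csv
from pathlib import Path


def save_tables(tables, out_dir="wikipedia_tables"):
    """Write each table (a list of rows of cell strings) to its own CSV file.

    Returns the paths written: out_dir/table_1.csv, out_dir/table_2.csv, ...
    """
    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)
    paths = []
    for number, rows in enumerate(tables, 1):
        path = folder / f"table_{number}.csv"
        with path.open("w", newline="", encoding="utf-8") as f:
            csv.writer(f).writerows(rows)  # rows of different lengths are written as-is
        paths.append(path)
    return paths
```

Calling `save_tables(page_data["tables"])` after step 5 would create `wikipedia_tables/table_1.csv`, `table_2.csv`, and so on, one file per table.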

What are the risks of web scraping?

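Copyright issues: Content on web pages is usually protected by copyright. Scraping and using copyrighted data without authorization may constitute infringement and expose you to legal liability. Wikipedia's text is openly licensed, but reuse must still follow the license terms (for CC BY-SA, attribution and share-alike).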

Violation of website terms of use: Many websites explicitly address scraping in their terms of use, and violating these terms can lead to legal action or loss of access to the site.

Anti-scraping mechanisms: Sending requests too frequently may cause the site to flag your IP address as abnormal and block it, interrupting the scraping process.

Technical complexity: Wikipedia pages have a complex structure with many nested elements and some dynamically generated content, which can make it difficult to extract exactly the data you need.

Data accuracy: Wikipedia content is edited by users. Although there are review mechanisms, it may still contain errors or incomplete or outdated information. If scraped data is not verified and cleaned, it may mislead later research or analysis.

Data freshness: Wikipedia pages may be updated frequently, so scraped data can go out of date quickly. Re-scrape regularly to keep it current.
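The anti-scraping point above is easiest to address by slowing down. Below is a minimal sketch of a politeness delay plus an exponential backoff schedule; `Throttle`, `backoff_delays`, and the default values (1 second between requests, 4 retries, 30-second cap) are illustrative assumptions, not limits published by Wikipedia.

```python
import time


class Throttle:
    """Enforce a minimum interval between consecutive requests.

    `clock` and `sleep` are injectable so the timing logic can be tested
    without actually waiting. The 1-second default is an assumption.
    """

    def __init__(self, min_interval=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least `min_interval` seconds have passed since the last call."""
        now = self.clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self.sleep(remaining)
                now += remaining
        self._last = now


def backoff_delays(retries=4, base=1.0, cap=30.0):
    """Exponential backoff schedule: base, 2*base, 4*base, ... capped at `cap` seconds."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


throttle = Throttle(min_interval=1.0)
print(backoff_delays())  # [1.0, 2.0, 4.0, 8.0]
```

In a scraping loop you would call `throttle.wait()` before each `requests.get(...)`, and if a response comes back with HTTP 429 (Too Many Requests), sleep through the `backoff_delays()` schedule before retrying.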

Therefore, when scraping Wikipedia, proceed with caution: weigh these risks and take appropriate measures to keep your data collection legal and sustainable.
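For the data accuracy risk, cleaning is a sensible first step. A minimal sketch that strips Wikipedia-style inline markers (`[1]`, `[a]`, `[note 1]`, `[citation needed]`) and normalizes whitespace; `clean_paragraph` and the marker pattern are assumptions that cover common cases, not every template Wikipedia uses. Cleaning only tidies the text; verifying the facts still means checking them against the cited sources.

```python
import re

# Common Wikipedia-style inline markers: [1], [a], [note 1], [citation needed]
MARKER = re.compile(r"\[(?:\d+|[a-z]|note \d+|citation needed)\]", re.IGNORECASE)


def clean_paragraph(text):
    """Remove bracketed citation/note markers and collapse runs of whitespace."""
    text = MARKER.sub("", text)
    return re.sub(r"\s+", " ", text).strip()


raw = "Python is widely used.[1][2]  It was created\nby Guido van Rossum.[citation needed]"
print(clean_paragraph(raw))
```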

Conclusion

Web scraping is a powerful technique, especially for open knowledge bases such as Wikipedia, where it can provide valuable data for academic research, business analysis, and new applications. However, the process involves legal, technical, and ethical challenges and should be carried out with caution.

If you need a professional web scraping service, consider LunaProxy. We provide efficient scraping services for individuals and businesses, helping you collect accurate data without being held back by traffic limits.